Why Is It Vital to Address Generative AI Output Issues in the Current Landscape?

Introduction

Generative AI is reshaping various sectors, from entertainment to healthcare. Its growing presence brings both excitement and responsibility. Addressing output issues is crucial for ethical and effective use. Ignoring these challenges could lead to significant consequences for individuals and organizations alike.

Summary and Overview

Generative AI refers to advanced technologies capable of creating content, including text, images, and audio. These systems, powered by large datasets and deep learning models, have gained traction across industries. As organizations adopt generative AI, they face several challenges, including ethical dilemmas, biases, and legal complexities.

Ethical concerns arise from AI’s potential to produce harmful or misleading content. Issues of bias can lead to unfair outcomes, affecting marginalized groups disproportionately. Additionally, the legal landscape surrounding generative AI remains uncertain. Existing regulations struggle to keep pace with rapid advancements, raising questions about accountability and ownership of AI-generated content.

This article focuses on three main areas: the ethical implications of generative AI outputs, the need for robust legal frameworks, and the practical steps organizations must take to ensure responsible use. By understanding these aspects, businesses can harness generative AI’s potential while minimizing risks.

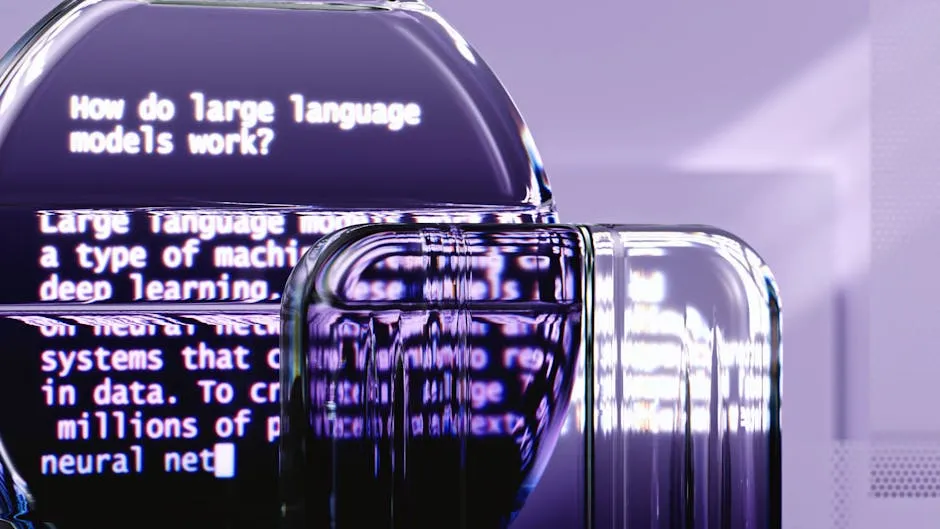

Current State of Generative AI

Generative AI has advanced rapidly in recent years. Technologies like large language models (LLMs) and deep learning algorithms are now capable of producing high-quality content. From text and images to music and video, these models can create diverse outputs. The release of tools like ChatGPT and DALL-E has sparked widespread interest and implementation.

Statistics highlight this surge. A recent survey found that 79% of businesses have engaged with generative AI in some capacity. Furthermore, about 22% use it regularly in their operations. Industries such as marketing, finance, and healthcare are integrating these technologies to enhance efficiency and creativity. The potential for innovation is significant, with many organizations expecting generative AI to transform their workflows.

Key players in the generative AI space include OpenAI, Google, and IBM. These companies lead the charge in developing advanced models and applications. Emerging technologies, such as multimodal AI, enable systems to generate outputs in various formats. As generative AI matures, its impact on industries is becoming increasingly profound, driving new business models and strategies.

Ethical Implications of Generative AI Outputs

The rise of generative AI brings ethical dilemmas that cannot be ignored. One major concern is bias within AI outputs. If the training data contains biases, the generated content may reflect and perpetuate these biases. This can lead to discrimination against marginalized groups, resulting in harmful consequences.

Transparency is another pressing issue. Many generative AI systems operate as “black boxes,” making it difficult to understand their decision-making processes. This lack of clarity can erode trust in AI technologies. Stakeholders demand transparency in how AI models are trained and how they operate, which is essential for accountability.

Moreover, the potential for misuse of AI-generated content raises ethical questions. From creating deepfakes to spreading misinformation, the risks are significant. Organizations must grapple with the implications of these technologies while ensuring ethical practices. Striking a balance between innovation and responsibility is crucial in navigating the evolving landscape of generative AI.

To dive deeper into the ethical aspects, consider reading The Ethics of Artificial Intelligence and Robotics. This resource provides a comprehensive overview of the ethical considerations surrounding AI technologies.

Addressing Bias in Training Data

Bias in training data can lead to skewed AI outputs. If the data used to train generative AI models reflects societal biases, the results will likely mirror those prejudices. For instance, a hiring AI trained on historical employment data may favor the demographics that were hired most often in the past. The result can be discriminatory hiring practices that fall hardest on marginalized groups, perpetuating stereotypes and limiting opportunities for underrepresented individuals.
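One way to make the hiring example concrete is to compare how often a model selects candidates from each group. The sketch below is illustrative only: the group labels, the sample data, and the function names are hypothetical, and the 0.8 threshold is a commonly cited rule of thumb (the "four-fifths rule" from US employment guidance), not a legal test that settles whether a system is fair.

```python
from collections import Counter

def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model shortlisted the candidate.
    """
    totals, positives = Counter(), Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest

# Hypothetical screening results: (group label, was the candidate shortlisted?)
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # rule-of-thumb threshold, assumed here for illustration
print(rates, ratio, flagged)
```

A low ratio does not prove discrimination, and a high one does not rule it out. It is a signal that the outputs and the training data behind them deserve a closer look.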

Importance of Transparency

Transparency in AI decision-making is crucial. When users understand how AI models arrive at their conclusions, they are more likely to trust these systems. This transparency fosters accountability and enables stakeholders to assess the reliability of AI outputs. Explainability helps demystify AI processes, allowing users to identify potential flaws or biases. Ultimately, transparent AI systems build confidence among users, promoting responsible adoption and use.

Legal Frameworks and Responsibilities

The legal landscape surrounding generative AI is evolving rapidly. Currently, various regulations address AI usage, including data protection laws like the GDPR. These laws aim to safeguard personal data and ensure that organizations use AI responsibly. However, gaps exist in these frameworks. Many regulations struggle to keep pace with the rapid advancements in AI technologies, leaving room for exploitation and misuse.

For instance, intellectual property laws remain unclear regarding ownership of AI-generated content. When an AI creates something new, questions arise about who holds the copyright: the developer, the user, or the AI itself. This ambiguity can lead to legal disputes and confusion in the marketplace.

Moreover, existing laws often lack specific provisions for accountability in AI deployment. Companies may face challenges ensuring compliance with data protection laws while leveraging AI’s capabilities. As generative AI continues to grow, the need for updated legal frameworks becomes increasingly urgent to address these complexities.

In response, lawmakers are introducing new regulations. The EU’s AI Act, which entered into force in August 2024, aims to provide clearer rules on the use of AI, including generative AI, with an emphasis on transparency and accountability. By establishing robust legal frameworks, stakeholders can better navigate the potential pitfalls of AI technologies, ensuring ethical and responsible use.

Ultimately, addressing these legal gaps is vital for fostering innovation while protecting individual rights and societal values. Organizations must stay informed about evolving regulations and proactively adapt their practices to ensure compliance and mitigate risks associated with generative AI.

To explore the implications of AI’s impact on the workforce, check out AI and the Future of Work by Thomas H. Davenport, which delves into how AI will reshape job roles and industries.

Intellectual Property Concerns

Generative AI raises significant questions about copyright and ownership. When AI creates content, who owns it? Current laws struggle to answer, and recent litigation highlights the difficulty. For instance, the case of Getty Images against Stability AI revolves around whether training an image generator on copyrighted photographs, and the images it then produces, infringe copyright. Such disputes could reshape the landscape of intellectual property rights. Companies must navigate these complexities carefully to avoid legal pitfalls while leveraging AI’s capabilities for innovation.

Data Privacy Issues

Generative AI can unintentionally compromise data privacy. These systems often require large datasets, which may include sensitive personal information. If not handled properly, this data can be exposed, leading to breaches. Compliance with regulations like GDPR is essential to protect user data. Organizations must ensure they collect and process data responsibly, minimizing risks associated with AI outputs. Failure to do so could result in legal repercussions and damage to reputation, emphasizing the need for stringent data governance practices.
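One small, practical safeguard is to strip obvious personal identifiers from text before it is sent to a generative model or stored for training. The sketch below is a minimal illustration, not a compliance solution: the two patterns and the `redact` helper are hypothetical, they catch only simple email addresses and phone numbers, and real personal-data detection needs far broader coverage (names, addresses, ID numbers, and so on).

```python
import re

# Deliberately simple patterns for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text

prompt = "Contact Jane at jane.doe@example.com or +1 (555) 010-9999."
print(redact(prompt))
```

Redacting before the data leaves the organization's systems means the model provider never receives the raw identifiers, which is easier to reason about than trying to remove them afterwards.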

Best Practices for Managing Generative AI Outputs

Organizations using generative AI should prioritize effective management to ensure ethical and responsible use. First, establish clear AI governance frameworks. This involves defining roles and responsibilities for AI oversight, ensuring accountability in AI deployment. Second, implement comprehensive data privacy policies. Organizations must ensure compliance with data protection regulations, like GDPR, to safeguard personal information.

Third, foster an ethical AI culture within the organization. Provide employees with training on responsible AI practices. Encourage discussions around bias, transparency, and accountability in AI outputs. This promotes a shared understanding of the ethical implications of generative AI.

Additionally, organizations should regularly audit AI systems to identify and address potential issues. This includes evaluating training data for bias and ensuring transparency in AI decision-making processes. By proactively addressing these concerns, organizations can build trust among stakeholders and mitigate risks associated with AI technology.

For those looking to deepen their technical understanding, consider Deep Learning by Ian Goodfellow, a foundational text for understanding the intricacies of AI development.

Furthermore, consider establishing partnerships with experts in AI ethics and law. Collaborating with external organizations can provide valuable insights and help navigate the evolving regulatory landscape. This approach not only enhances compliance but also positions the organization as a leader in responsible AI use.

In summary, managing generative AI outputs requires a multifaceted approach. By focusing on governance, ethical practices, and ongoing evaluation, organizations can harness the potential of AI while minimizing risks and fostering public trust.

Developing Clear Policies

Creating internal policies for generative AI is essential. These guidelines ensure a responsible approach to AI usage. Policies should clearly define acceptable use cases, outlining what employees can and cannot do with AI tools.

Key elements to include are data privacy measures, ethical standards, and compliance with existing laws. It’s crucial to specify how to handle sensitive information and address bias. Regular audits and updates to the policies can help organizations remain aligned with evolving technology and regulations. By establishing clear policies, companies can foster a culture of accountability and trust in generative AI.

Training and Awareness

Ongoing training on AI ethics and compliance is vital for employees. A well-informed workforce can navigate the complexities of generative AI responsibly. Regular workshops and seminars can enhance understanding of ethical considerations, promoting a culture of awareness.

Integrating AI literacy into organizational culture can be achieved through diverse methods. For example, onboarding programs can include AI ethics modules. Encouraging discussions around real-world scenarios can also deepen understanding. By prioritizing training, organizations empower employees to use generative AI effectively and ethically, minimizing risks.

The Future of Generative AI and Potential Solutions

The future of generative AI looks promising, but challenges remain. As this technology matures, we can expect significant advancements in how AI interacts with human creativity. Emerging technologies, like advanced neural networks, will enhance AI’s ability to generate high-quality outputs across various formats. These innovations could lead to groundbreaking applications in fields like art, journalism, and healthcare.

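Moreover, collaboration between tech companies and academia will drive responsible AI development. This partnership can foster ethical guidelines and frameworks, ensuring that generative AI is used for good. It can also advance privacy-preserving techniques. Federated learning, for instance, trains a shared model across many devices or organizations while the raw data stays where it was collected, which reduces privacy exposure though it does not eliminate it.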
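To show the idea behind federated learning, here is a toy sketch of federated averaging. Everything in it is an assumption made for illustration: the one-parameter model (y is roughly weight times x), the two hypothetical clients, and the learning rate. Each client improves the model on its own data and sends back only the updated weight; the server averages the weights, weighted by how many samples each client holds. Note that sharing weights instead of data lowers the risk but is not a privacy guarantee on its own, since model updates can still leak information.

```python
def local_update(weight, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps for y ~ weight * x on one client's data."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight

def federated_average(global_weight, clients, rounds=50):
    """Repeatedly train locally on each client, then average the results."""
    for _ in range(rounds):
        # Each client returns only its updated weight and its sample count.
        updates = [(local_update(global_weight, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        global_weight = sum(w * n for w, n in updates) / total
    return global_weight

# Hypothetical private datasets; the raw (x, y) pairs never leave each client.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]

w = federated_average(0.0, clients)
print(w)  # close to 2.0, the slope shared by both datasets
```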

As generative AI evolves, addressing existing issues is crucial. Transparency will become even more important. Users must understand how AI systems make decisions. This clarity builds trust and accountability, essential for widespread adoption. Additionally, regulatory frameworks will need to adapt to the changing landscape. New policies should focus on intellectual property rights, data privacy, and ethical standards.

In summary, the future of AI holds great potential. By embracing emerging technologies and prioritizing ethical considerations, stakeholders can harness generative AI’s capabilities responsibly. This approach will ensure that AI continues to benefit society while mitigating risks and challenges.

Innovations in AI Ethics

New frameworks for ethical AI usage are emerging, addressing the complexities of generative AI. These frameworks encourage responsible development and deployment of AI technologies. Collaboration between tech companies and regulatory bodies is vital to establish clear guidelines.

For instance, industry leaders are working together to create standards for transparency, data privacy, and accountability. These initiatives aim to balance innovation with ethical considerations, ensuring that generative AI serves society positively. By fostering open dialogue, stakeholders can navigate the evolving landscape of AI ethics, promoting trust and responsible practices.

Conclusion

Addressing generative AI output issues is vital for responsible use. Clear policies, ongoing training, and ethical frameworks are essential for any organization deploying the technology. These measures help mitigate risks while maximizing the benefits of this powerful technology.

Stakeholders must prioritize ethical and legal considerations in deploying generative AI. By doing so, we can ensure that the technology evolves in a manner that is beneficial to all. Let’s work together to shape a future where generative AI fosters innovation and respects societal values.


All images from Pexels